Computers. The world is run by them and, like it or not, they are fast becoming (or in some instances have already become) the crutch on which we increasingly depend. They are now simply part of everyday life, a life that would be so much more difficult without them.

There are many people who can remember, not so very long ago, when everything in the office was handwritten and more than likely had to be done in triplicate. Would we really concede that this was a better time, and that things were done more efficiently when people relied on their grey matter?

From the moment man first found that a simple machine could greatly improve his effectiveness in the workplace, the stage was set for perpetual improvement. It only ever needed someone with the ambition and foresight to see how much easier machines could make life, and the race was on.

For hundreds of years, people toiled with mathematics and binary systems in an effort to be the first to create the basis of what we have today. As clever and forward-thinking as these people doubtless were, it wasn’t until the start of the 1940s that the race began to hot up and things really started to take shape.

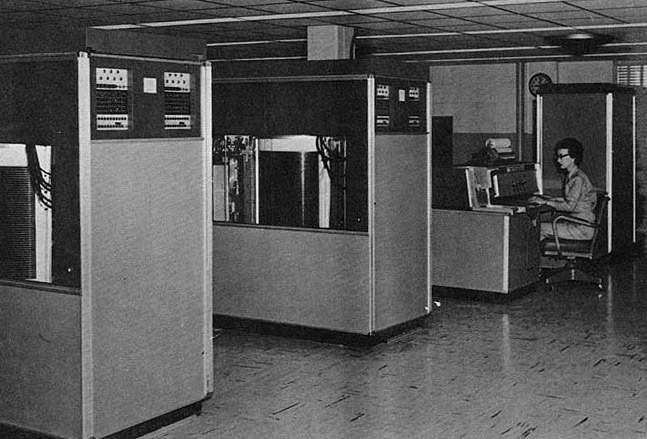

It’s important to remember that back in the 1940s, any type of machine, regardless of its use, was going to be on the large side. Consider that the first incarnations of what we have today took up every inch of space in the rooms in which they were built, and that they consumed as much power as many hundreds of today’s PCs, and you begin to see the problem.

Image: Wikipedia

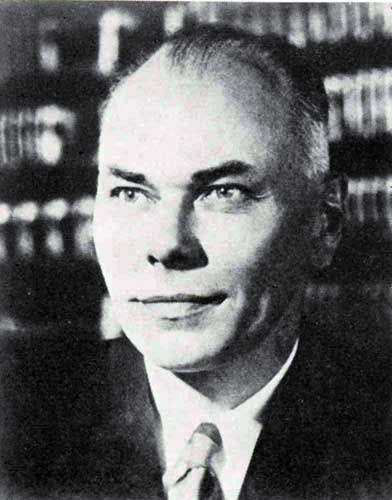

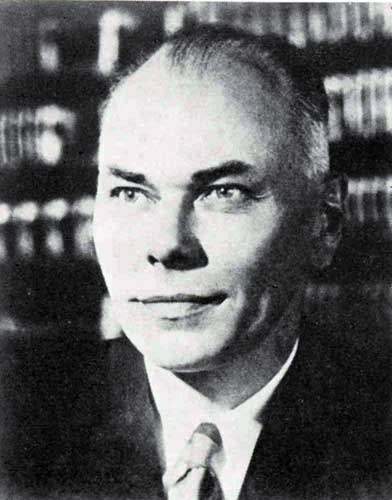

In February 1944, Howard Hathaway Aiken completed development of the Harvard MKI and installed it at the university after which it was named. This innovative machine was inspired by the Analytical Engine that Charles Babbage had designed a century earlier.

Image: user:geni (Wikipedia)

To put this computer into perspective, the original behemoth was around 50 feet long and 8 feet high. Inside, it had close to three quarters of a million parts and 500 miles of wiring, and it used electromechanical switches to open and close its electric circuits. Clunky as it was, it was a move in the right direction.

Aiken continued to build on his initial success with MKII and MKIII versions. By the time he finished the fourth incarnation in 1952, built for the US Air Force, it was all electronic, but unfortunately it had taken him too long: others had already got there first.

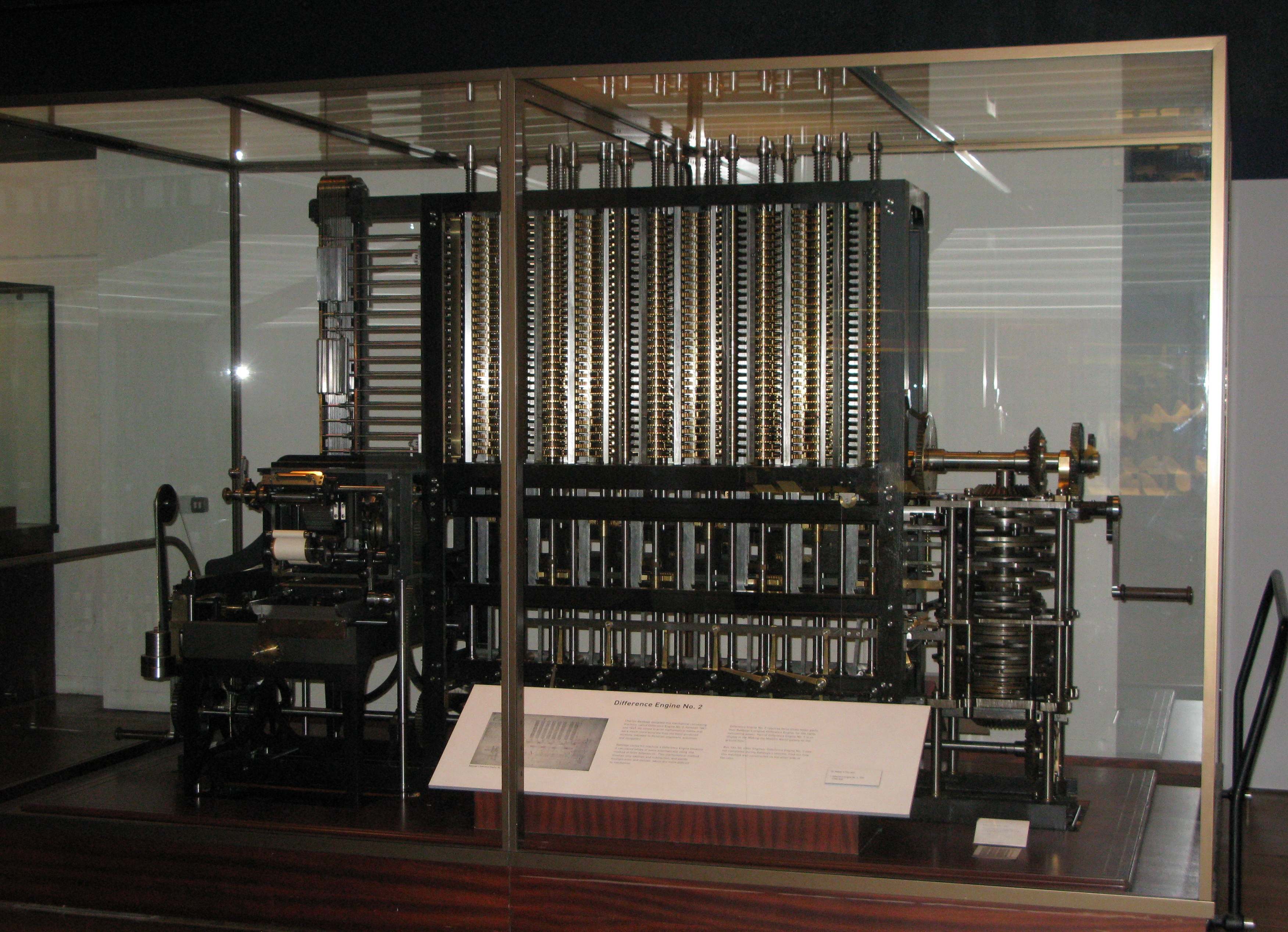

In 1946, six years before Aiken’s MKIV, J. Presper Eckert and John Mauchly unveiled the ENIAC, the first general-purpose, all-electronic computer. The Electronic Numerical Integrator and Computer took the game to a whole new level in every respect.

Image: U.S. Army (Wikipedia)

To start with, it was 1,000 times faster than Aiken’s MKI, and inside it used nearly 18,000 vacuum tubes instead of mechanical switches. It was a staggering 100 feet long and 10 feet tall, and it could solve in 20 seconds a math problem that, it is estimated, would have taken one man 40 hours to complete, which was impressive for the time.

Although it was a greatly improved machine, the ENIAC still had one major drawback: it could perform only one specific task, and it had to be rewired before it could perform a different one. This was the next hurdle these pioneers needed to clear, and it wasn’t long before someone came up with the answer.

Image: Wikipedia

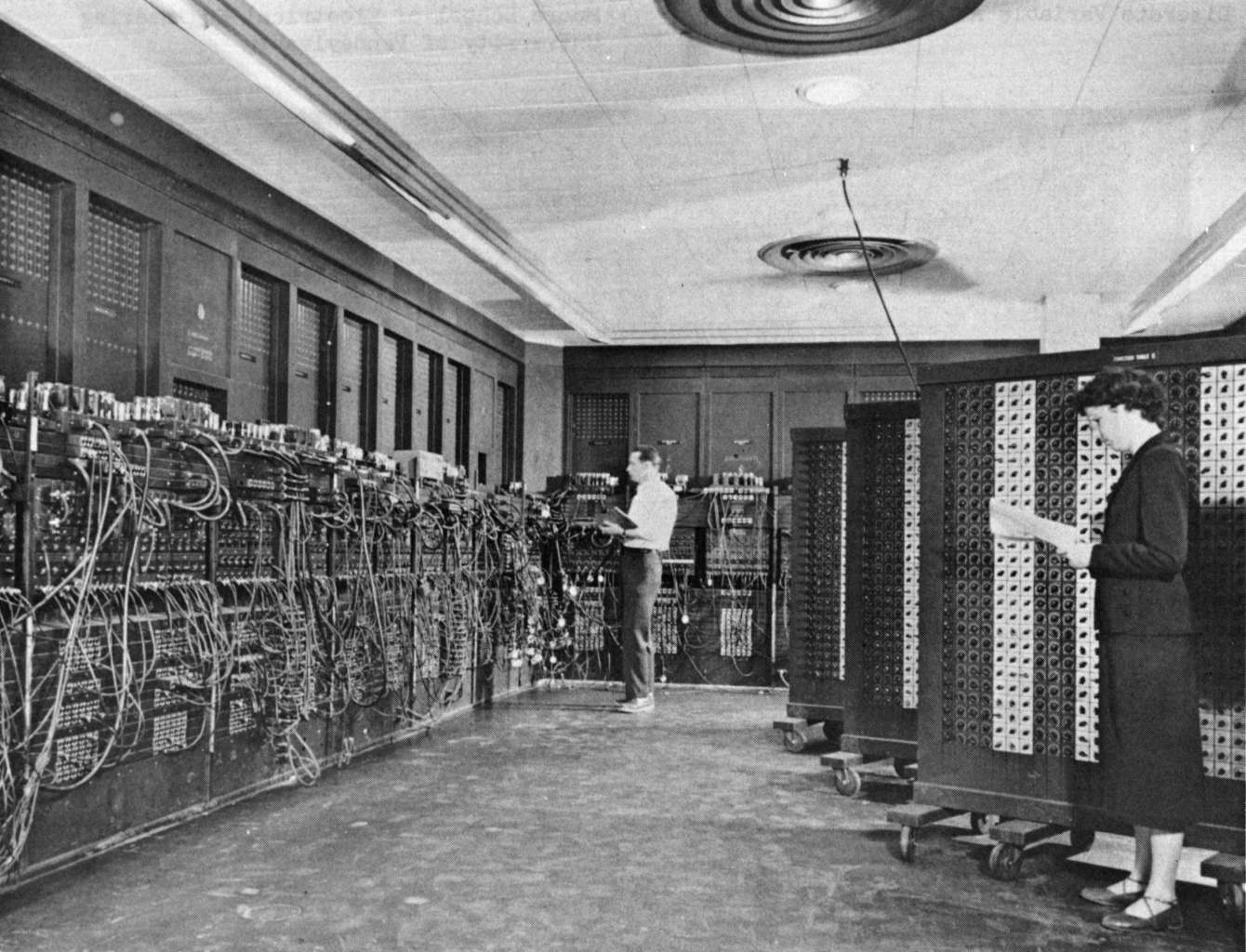

Enter John von Neumann, a Hungarian-American who is widely regarded by those qualified to comment as one of the greatest mathematicians in modern history. In one fell swoop he cleared the hurdle, taking the problem out of the equation and giving the game the shot in the arm that it needed.

Von Neumann’s contribution, the stored-program concept, meant that a computer’s instructions could be held in its memory as binary code alongside the data they worked on, rather than being set up by rewiring. He described the idea in his 1945 report on the EDVAC, and the Electronic Discrete Variable Automatic Computer, delivered in 1949, kept its programs and data in an internal mercury delay-line memory.

In 1951, Eckert and Mauchly took the lead again with the UNIVAC I, the first commercial computer produced in the United States. Machine size was slowly but surely coming down, but by far the biggest improvement was in the technology inside, which was coming along in leaps and bounds.

The UNIVAC I had an internal storage capacity of 1,000 words (12,000 characters) and, for its time, impressive processing speed. It was also the first computer to use buffer memory, a temporary area that holds data until it can be processed, and it came equipped with a magnetic tape unit.

In April 1952, IBM, now one of the world’s leading technology giants, introduced the 701, its first commercial scientific computer. It was quickly followed in 1953 by the 650, a versatile system that sold over 2,000 units between 1954 and 1962 and was popular for teaching high school students computer programming.

Image: RTC (Wikipedia)

A few years later, in 1957, IBM installed its first 305 RAMAC (Random Access Method of Accounting and Control), one of the last vacuum tube computers it would build. Its ability to store an incredible 5,000,000 characters helped this machine’s production pass the 1,000 mark.

September 12th 1958 saw what could be considered a huge turning point in the perpetual evolution of the computer, as it was the day that Jack Kilby of Texas Instruments demonstrated the first working integrated circuit (IC). Robert Noyce, at Fairchild Semiconductor, independently developed his own version shortly afterwards.

The IC had been proposed as a concept over six years earlier by a British radar engineer named Geoffrey Dummer, but it was Kilby and Noyce who turned the concept into working devices. These tiny packages, which would ultimately be crammed with electronic components, would turn out to be the all-important building blocks of computer hardware and a new way forward.

As the swinging 60s rolled in, IBM unveiled Stretch (or the 7030, to give it its model number), the first transistorized supercomputer. It was the fastest computer in the world for three years, and its original purchase price was an amazing $13 million plus, but because it failed to hit its promised performance target, this was cut to under $8 million.

Things seemed to be moving along nicely, and in March 1965 Digital Equipment Corporation brought out the 12-bit PDP-8. This was the first commercially successful minicomputer and, at a price of under $20,000, the PDP-8 line went on to sell more than 50,000 systems, far outstripping anything else at the time.

The PDP-8 family proved so successful that variations and upgraded models kept it going through to the late 1970s, and between 1973 and 1977 it was by far the best-selling computer in the world.

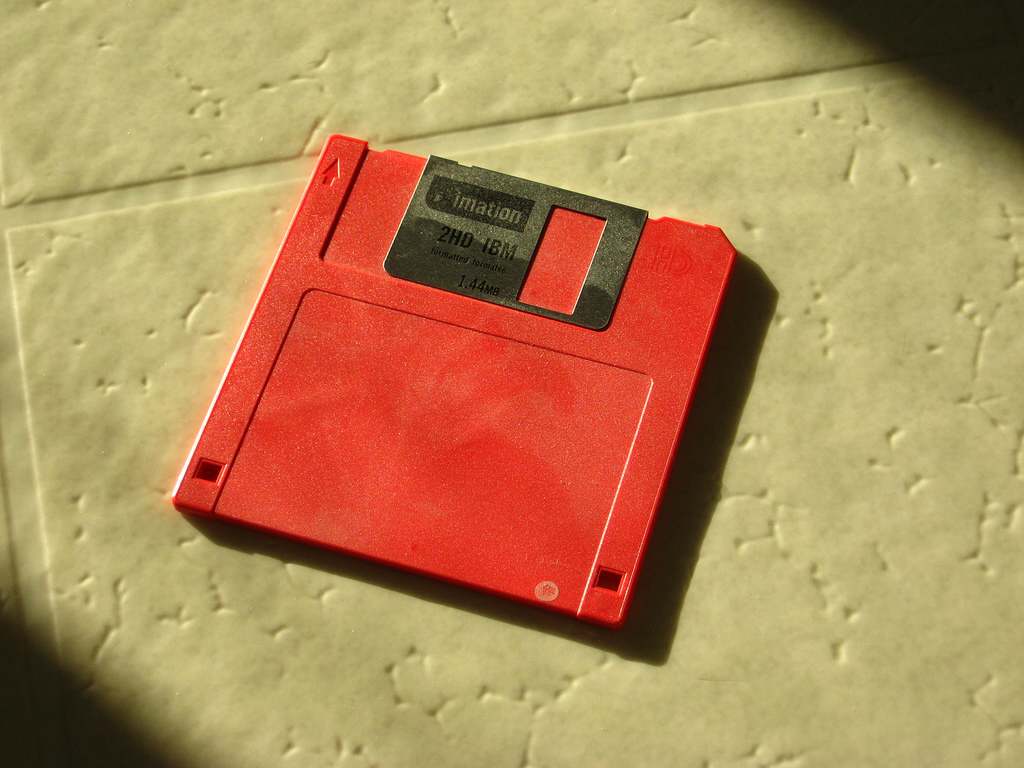

Image: 92wardsenatorfe (fotopedia)

A great decade for computer development, the 1970s also heralded the introduction of the ubiquitous floppy disk, a form of data storage that, remarkably, was still in use as the 21st century dawned.

The 1970s was also the decade when personal computers began to take off in a big way, with models like the Commodore PET, which had its own built-in screen, and the Apple II, which could be connected to the family TV set.

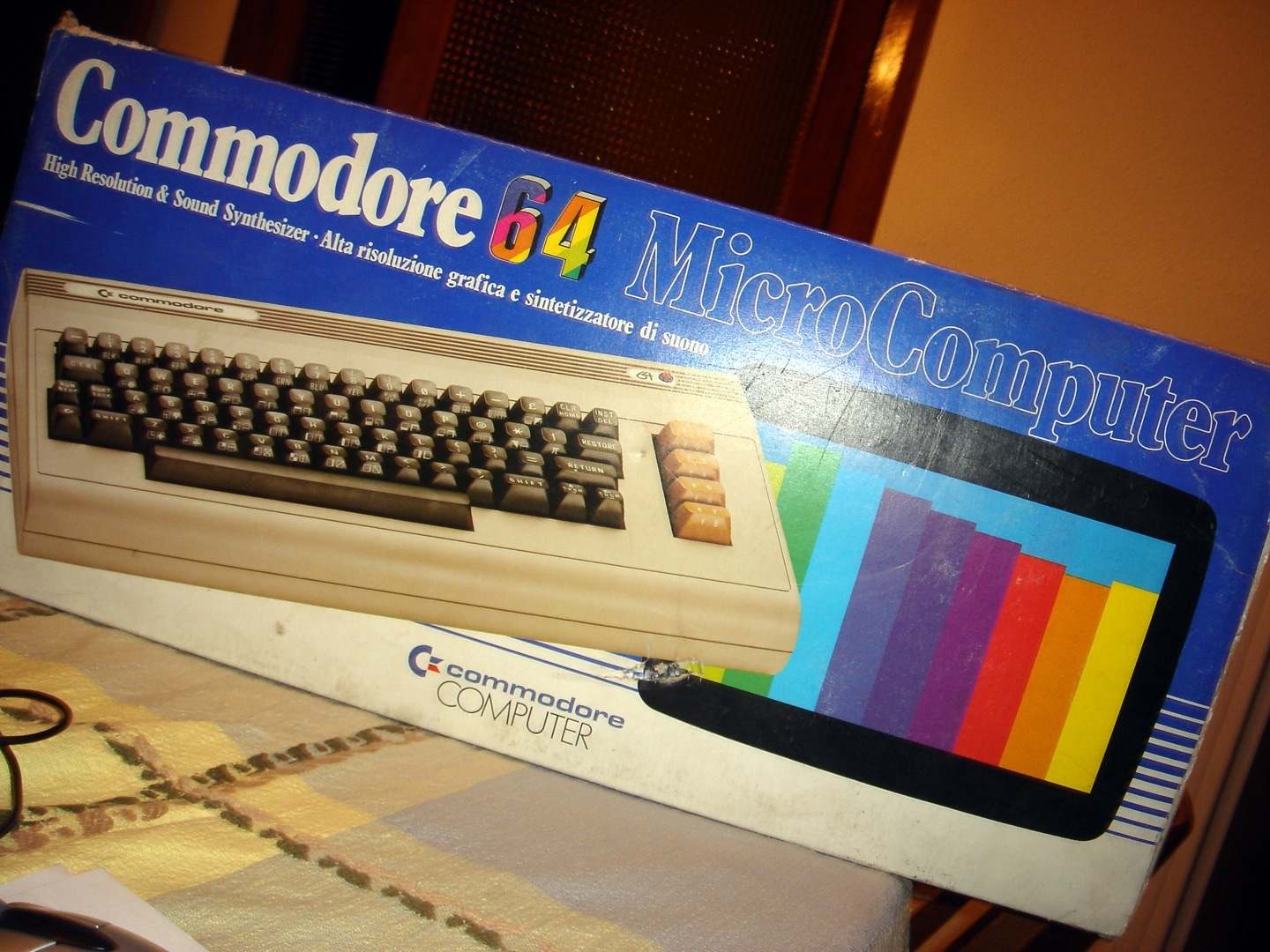

Image: JaulaDeArdilla (fotopedia)

As the decade ended and the 1980s emerged, this type of home computer fun was all the rage, with such machines as the Sinclair ZX Spectrum, Atari 800XL and the Commodore 64 all being introduced. By the time 1982 came around, it was estimated that even at an average cost of over $500, there were more than 620,000 home computer systems in the United States alone.

On November 20th 1985, nine years after registering the company name, Microsoft launched the first retail version of Windows. This was the start of something that would grow bigger than anyone could possibly have anticipated, and it remains hugely popular today.

After this point, it almost appears as if someone flicked a switch and the whole world went computer crazy. Big, multinational companies were bending over backwards to outdo each other and be the first to get the next ‘big thing’ to market, which meant that technology development just seemed to explode.

Computers were becoming more people-friendly in both size and price, but users still faced a steep learning curve.

In the 1980s, the main things you needed to worry about with your computer were screen burn-in and the dreaded power surge. A good-quality surge-protected socket strip would solve the latter, but the former was going to take a little more imagination.

Berkeley Systems released the answer to the problem in 1989: the After Dark screensaver. Originally produced for the Mac, it proved so popular that it was soon made available for Windows, bringing its famous ‘Flying Toasters’ to a whole new audience.

The 1990s brought a major period of technological transition, with new ideas being introduced almost weekly. The decade saw the eagerly anticipated release of Windows 3.1 in 1992, followed by further upgrades and eventually Windows 95 and 98.

Image: Marcin Wichary (fotopedia)

Before Windows 95 and 98 arrived, there was another huge turning point in computer history: in 1993 the public was introduced to something that would ultimately have a resounding effect on most people’s lives, the World Wide Web. Only three years later, Jack Smith and Sabeer Bhatia launched their web-based email service, Hotmail, which was an instant success.

As the internet exploded, Hotmail grew so rapidly that it caught the attention of the mighty Microsoft, which bought it at the end of 1997 for a reported $400,000,000. It proved to be a great acquisition: since the sale, more than one billion Hotmail accounts have been created.

As the new millennium began, there was no let-up in the torrent of new programs and technological advances, and many of the great developments concerned the look and size of the computer, with laptops and tablet PCs, rather than what it could actually do.

Image: viagallery.com (fotopedia)

Technology is moving so fast that it is increasingly difficult to keep abreast of it. It has long been said that as soon as you buy a computer, by the time you get it home and up and running, the odds are there will be a newer, more up-to-date model available.

That pace would have been unthinkable for the computers of little more than 50 years ago, and it is proof that not only do we love computers today, but we see them as an integral part of our everyday lives.